Learning-based scene representations such as neural radiance fields or light

field networks, that rely on fitting a scene model to image observations, commonly

encounter challenges in the presence of inconsistencies within the images caused by

occlusions, inaccurately estimated camera parameters or effects like lens flare. To

address this challenge, we introduce RANdom RAy Consensus (RANRAC), an efficient

approach to eliminate the effect of inconsistent data, thereby taking inspiration from

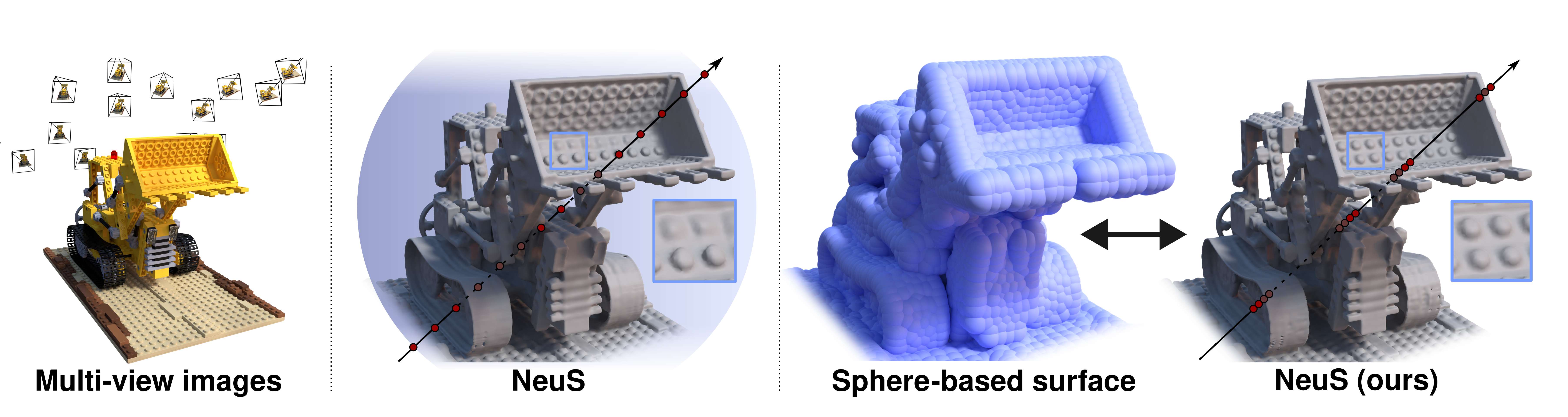

classical RANSAC based outlier detection for model fitting. In contrast to the

down-weighting of the effect of outliers based on robust loss formulations, our approach

reliably detects and excludes inconsistent perspectives, resulting in clean images

without floating artifacts. For this purpose, we formulate a fuzzy adaption of the

RANSAC paradigm, enabling its application to large scale models. We interpret the

minimal number of samples to determine the model parameters as a tunable

hyperparameter, investigate the generation of hypotheses with data-driven models, and analyse

the validation of hypotheses in noisy environments. We demonstrate the

compatibility and potential of our solution for both photo-realistic robust multi-view

reconstruction from real-world images based on neural radiance fields and for single-shot

reconstruction based on light-field networks. In particular, the results indicate significant

improvements compared to state-of-the-art robust methods for novel-view synthesis on both

synthetic and captured scenes with various inconsistencies including occlusions, noisy

camera pose estimates, and unfocused perspectives. The results further indicate

significant improvements for single-shot reconstruction from occluded images.
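The general random-consensus idea behind this approach can be illustrated with a toy sketch. To be clear, this is not the RANRAC implementation. Each "view" is a 2D point, and the "model" is simply the mean of a sampled subset of views (a hypothetical stand-in for fitting a radiance field or light field network to a subset of images). The Gaussian soft score with width `tau` is our own assumption for what a fuzzy hypothesis validation could look like, similar in spirit to MSAC-style scoring. The names `random_consensus`, `fit_mean`, `fuzzy_score`, and `required_iterations` are illustrative. The sketch treats `sample_size` as a tunable hyperparameter and uses the classical RANSAC trial-count bound to show how the required number of hypotheses grows with it.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Obs = Tuple[float, float]  # toy per-view observation (hypothetical)


def required_iterations(inlier_ratio: float, sample_size: int,
                        confidence: float = 0.99) -> int:
    """Classical RANSAC trial count: enough random samples that, with
    probability `confidence`, at least one contains only inliers."""
    if not 0.0 < inlier_ratio <= 1.0:
        raise ValueError("inlier_ratio must be in (0, 1]")
    p_clean = inlier_ratio ** sample_size  # P(one sample is all-inlier)
    if p_clean >= 1.0:
        return 1
    if p_clean <= 0.0:
        raise ValueError("sample_size too large for this inlier_ratio")
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_clean))


def fit_mean(views: Sequence[Obs]) -> Obs:
    # Hypothetical stand-in for fitting a scene model to a subset of views.
    n = len(views)
    return (sum(v[0] for v in views) / n, sum(v[1] for v in views) / n)


def residual(model: Obs, view: Obs) -> float:
    # Disagreement between the hypothesis and one view.
    return math.hypot(view[0] - model[0], view[1] - model[1])


def fuzzy_score(res: float, tau: float) -> float:
    # Soft inlier membership in (0, 1]: near 1 for small residuals,
    # near 0 for large ones (assumed Gaussian kernel).
    return math.exp(-(res / tau) ** 2)


def random_consensus(views: Sequence[Obs], sample_size: int, n_iters: int,
                     tau: float, inlier_thresh: float,
                     seed: int = 0) -> Tuple[Obs, List[int]]:
    if n_iters < 1:
        raise ValueError("n_iters must be >= 1")
    rng = random.Random(seed)
    best_score, best_model = -1.0, None  # type: float, Optional[Obs]
    for _ in range(n_iters):
        # Generate a hypothesis from a random subset of `sample_size` views.
        model = fit_mean(rng.sample(list(views), sample_size))
        # Validate it against all views with the soft score.
        score = sum(fuzzy_score(residual(model, v), tau) for v in views)
        if score > best_score:
            best_score, best_model = score, model
    assert best_model is not None
    # Hard decision at the end: exclude views far from the best hypothesis,
    # then refit on the remaining consistent views.
    inliers = [i for i, v in enumerate(views)
               if residual(best_model, v) < inlier_thresh]
    if not inliers:
        return best_model, []
    return fit_mean([views[i] for i in inliers]), inliers


views: List[Obs] = [
    (1.0, 2.0), (1.1, 2.0), (0.9, 2.0), (1.0, 2.1),
    (1.0, 1.9), (1.05, 2.05), (0.95, 1.95),   # consistent views
    (5.0, -3.0), (6.0, 4.0), (-4.0, 7.0),     # inconsistent views
]
model, inliers = random_consensus(views, sample_size=3, n_iters=200,
                                  tau=0.5, inlier_thresh=1.0)
print(model, inliers)
for k in (1, 3, 5):
    print(k, required_iterations(0.7, k))
```

With these toy numbers, the three inconsistent views should be excluded and the refit lands at about (1.0, 2.0). The trial-count bound shows the trade-off of treating the sample size as a hyperparameter. At a 70% inlier ratio and 99% confidence, a single-view sample needs 4 trials, while a three-view sample already needs 11. Larger samples constrain each hypothesis better but require more hypotheses.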